Software testing serves to “pin” features in place — providing assurance they are not broken by future changes.

Our industry has reached a consensus that testing is essential for software quality. This post explores an underappreciated aspect of software testing, and how it can be leveraged beyond the development environment.

Frontend testing offers a view of software health that is closest to the end user’s experience. If a frontend test fails, you can be sure something is broken in your application. But a failed frontend test doesn’t provide much insight into what is broken.

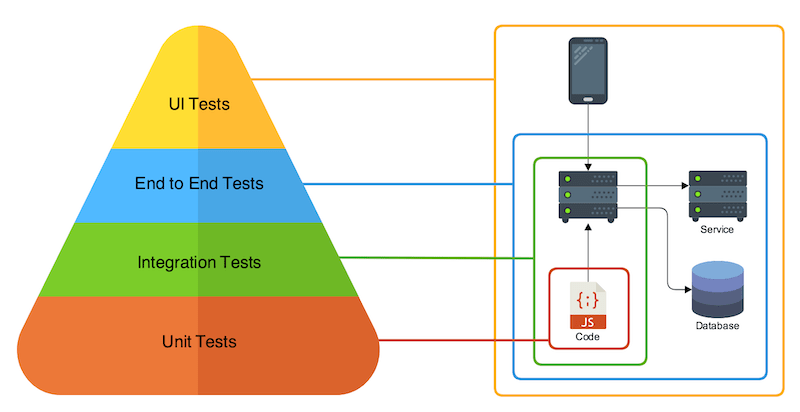

As Nathan Peck points out in his post on “the testing pyramid”, frontend tests (AKA UI tests) are just the tip of the pyramid. Lower-layer tests provide more visibility into the causes of failures and, most importantly, are less “brittle”. Frontend tests are considered brittle because they must be updated most frequently, and because they view the system as a whole rather than as decomposed parts.

Frontend: The Swiss Army Knife of Testing

I agree that frontend tests are wholly insufficient for ensuring code quality. I get why they are generally disliked throughout the software development lifecycle. As a developer, I recognize how frustrating it is to update dozens of tests for each change. And as a long-time Ops engineer, I avoided frontend tests like the plague.

However, I think the true value of frontend tests has gone unrecognized. Sure, they are useful from development through the CI/CD pipeline, but they are also incredibly useful in production! Not reusing frontend tests for production observability is a huge missed opportunity. Reusing them gives you deeper insight into your production application's health: an additional return on an already-booked investment.

Sadly, all too often testing is considered the domain of software engineers. After all, operations engineers are supposed to focus on observability, not testing… right? Wrong!

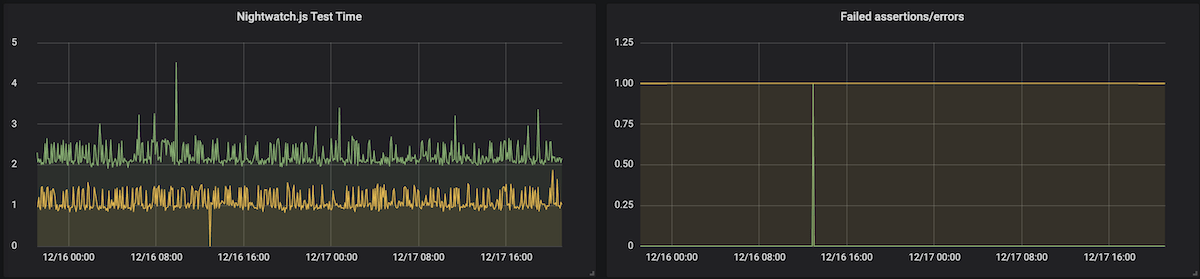

In this post, our goal is to leverage frontend browser testing for production observability and monitoring. We’ll use nightwatchjs_exporter to capture the results of Nightwatch.js tests with the Prometheus monitoring tool.

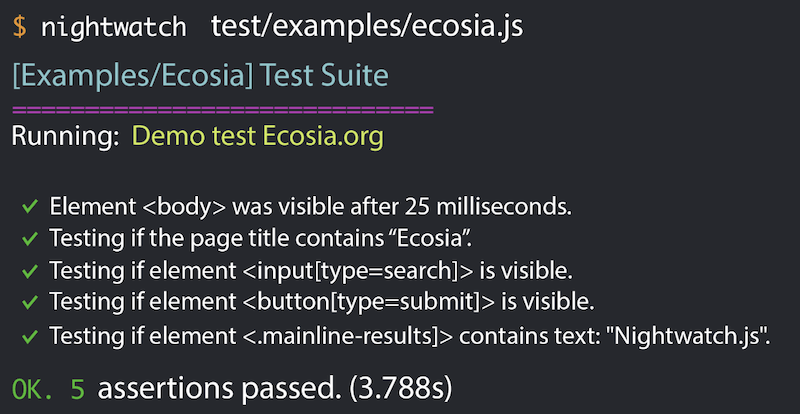

Automating Frontend Testing with Nightwatch.js

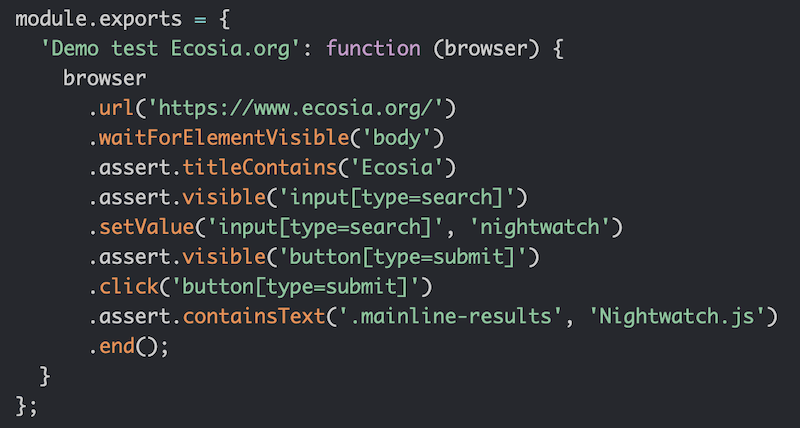

Nightwatch.js provides a DevOps-friendly frontend testing framework — tests are easy to write, and everyone on the team can read them without training. Developers write tests as code is created, quality engineers use and improve them, and even product managers will be comfortable capturing key user stories as formal tests.

Nightwatch.js tests run in a real headless Chromium browser, with the same DOM and JavaScript engine used by Chrome. Firefox, Microsoft Edge, and Safari drivers are also supported. Developers can test on their laptops using Docker containers, and CI/CD testing can employ a variety of operating systems and browser versions.

After all this investment in mature frontend tests, why are Ops teams failing to use them in production?

Production Frontend Testing with Prometheus.io

Prometheus.io has become the de facto cloud-native monitoring tool, but it’s also powerful in SMB and enterprise environments. Can you find Prometheus’ white-on-red torch in the “Where’s Waldo” of the Cloud Native Computing Foundation’s landscape?

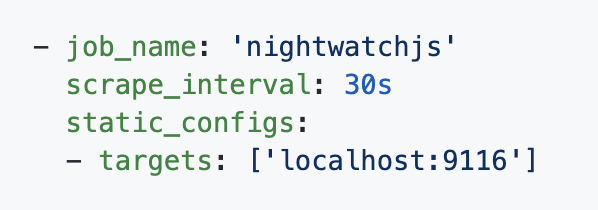

For data collection, Prometheus depends on exporters that provide correctly formatted metrics. Good examples are the node_exporter for system data, the MySQL stats exporter, and the HAProxy exporter.

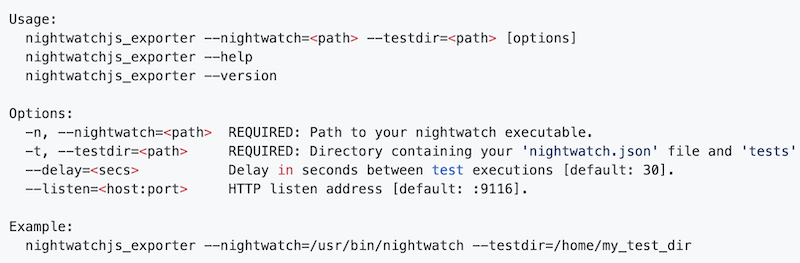

To tie Nightwatch.js and Prometheus together for use in production, I wrote nightwatchjs_exporter. It does two things:

- Runs Nightwatch.js tests on a schedule.

- Exports test results in Prometheus format.

https://github.com/nmcclain/nightwatchjs_exporter
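
To make “Prometheus format” concrete, the sketch below renders a list of test results as text in the Prometheus exposition format. It is written in JavaScript purely for illustration and is not the exporter’s actual code. The result shape (`name`, `passed`, `durationSeconds`) and the metric names are assumptions, so check the README for the real ones.

```javascript
// Escape a label value for the Prometheus text exposition format:
// backslash, double quote, and newline must be escaped.
function escapeLabelValue(value) {
  return String(value)
    .replace(/\\/g, '\\\\')
    .replace(/"/g, '\\"')
    .replace(/\n/g, '\\n');
}

// `results` is an assumed shape: [{ name, passed, durationSeconds }, ...].
// The metric names are illustrative, not the exporter's real ones.
function renderPrometheusMetrics(results) {
  const lines = [
    '# HELP frontend_test_passed 1 if the test passed on its last run, 0 otherwise.',
    '# TYPE frontend_test_passed gauge',
  ];
  for (const r of results) {
    lines.push(`frontend_test_passed{test="${escapeLabelValue(r.name)}"} ${r.passed ? 1 : 0}`);
  }
  lines.push(
    '# HELP frontend_test_duration_seconds Duration of the last run of the test.',
    '# TYPE frontend_test_duration_seconds gauge'
  );
  for (const r of results) {
    lines.push(
      `frontend_test_duration_seconds{test="${escapeLabelValue(r.name)}"} ${r.durationSeconds}`
    );
  }
  return lines.join('\n') + '\n';
}

// Example: two hypothetical test results rendered as metrics text.
console.log(renderPrometheusMetrics([
  { name: 'login', passed: true, durationSeconds: 1.5 },
  { name: 'checkout', passed: false, durationSeconds: 0.25 },
]));
```

Using one gauge per test, labeled by test name, keeps alerting simple: a rule can fire whenever any `frontend_test_passed` series drops to 0.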

Using nightwatchjs_exporter

Feel free to skip this section if you’re not interested in technical details!

Gory implementation details are provided in the README, but this overview will give you a feel for how things fit together:

- nightwatchjs_exporter executes a set of Nightwatch.js tests on a regular schedule, storing the test results.

- Prometheus scrapes the test results from nightwatchjs_exporter on a regular schedule, again storing the test results for analysis and alerting.

This decoupled approach follows the standard Prometheus exporter architecture. It ensures that the monitoring server’s performance isn’t impacted by frontend test execution.
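
The decoupling described above can be sketched in a few lines. This is an illustrative JavaScript sketch of the pattern, not the exporter’s real implementation: `produceMetrics` is a hypothetical async function that runs the test suite and returns Prometheus-formatted text, and the function names, interval, and port are all assumptions.

```javascript
const http = require('node:http');

// Holds the most recent metrics text. `produceMetrics` is a hypothetical async
// function that runs the test suite and returns Prometheus-formatted text.
function createResultCache(produceMetrics) {
  let latest = '';      // served until the first run completes
  let running = false;  // guards against overlapping runs of a slow suite

  async function refresh() {
    if (running) return;
    running = true;
    try {
      latest = await produceMetrics();
    } catch (err) {
      // Keep serving the previous results; a real exporter would likely
      // also expose the failure itself as a metric.
      console.error('test run failed:', err.message);
    } finally {
      running = false;
    }
  }

  return { refresh, snapshot: () => latest };
}

// Scrape handler: returns the cached text and never triggers a test run.
function handleScrape(cache, req, res) {
  if (req.url !== '/metrics') {
    res.writeHead(404);
    res.end();
    return;
  }
  res.writeHead(200, { 'Content-Type': 'text/plain; version=0.0.4; charset=utf-8' });
  res.end(cache.snapshot());
}

// Wires both halves together: tests run every `intervalMs`, scrapes read the cache.
function startExporter(produceMetrics, { intervalMs, port }) {
  const cache = createResultCache(produceMetrics);
  cache.refresh();
  const timer = setInterval(() => cache.refresh(), intervalMs);
  const server = http.createServer((req, res) => handleScrape(cache, req, res));
  server.listen(port);
  return { stop() { clearInterval(timer); server.close(); } };
}
```

Calling something like `startExporter(runSuite, { intervalMs: 60000, port: 9000 })`, with `runSuite` being your own function, would run the tests once a minute while scrapes stay cheap, because a scrape only ever reads the cached string.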

Not a Replacement for Holistic Observability

The technique of applying frontend tests in production can be a superpower for startups and B2B applications, but it’s not a replacement for truly capturing each user’s actual experience.

Whether you call it Real User Monitoring (RUM), high-cardinality analysis, or, traditionally, centralized event logging, this part of observability is perhaps the most valuable. I’m an advocate of retaining these events for at least 15 months so you can compare user experiences against “this time last quarter.”

At massive scale, using frontend tests for monitoring starts to lose value. With hundreds of thousands of daily users, canary tests and high-cardinality observability tools can offer deeper insight than independent browser tests.

I encourage you to check out nightwatchjs_exporter and start leveraging frontend tests in your production environment!

Found a bug in nightwatchjs_exporter? Want to contribute? Open an issue!

Looking for help with your observability strategy? I'm focused where software, infrastructure, and data meet security, operations, and performance. Follow or DM me on Twitter at @nedmcclain.